I started 2025 with a familiar problem: too many delivery commitments, not enough uninterrupted build time, and a growing gap between ideas and shipped outcomes. Cursor AI became my leverage point, not as a novelty but as an operating system for day-to-day product development.

Cursor didn’t just speed up my coding; it changed how I planned, shipped, and iterated.

The Problem

As projects scaled, the bottlenecks weren’t only technical; they were operational:

- Context switching across features, fixes, and refactors

- Slow iteration loops between decision → implementation → validation

- Uneven productivity driven by time fragmentation

Goals

The year had clear, business-oriented objectives:

- Increase shipping velocity without increasing burnout risk

- Reduce cycle time from idea to working implementation

- Standardize a repeatable workflow for complex tasks

- Improve quality through faster feedback and tighter iteration

Process

I approached Cursor AI like an adoption program, not a tool trial:

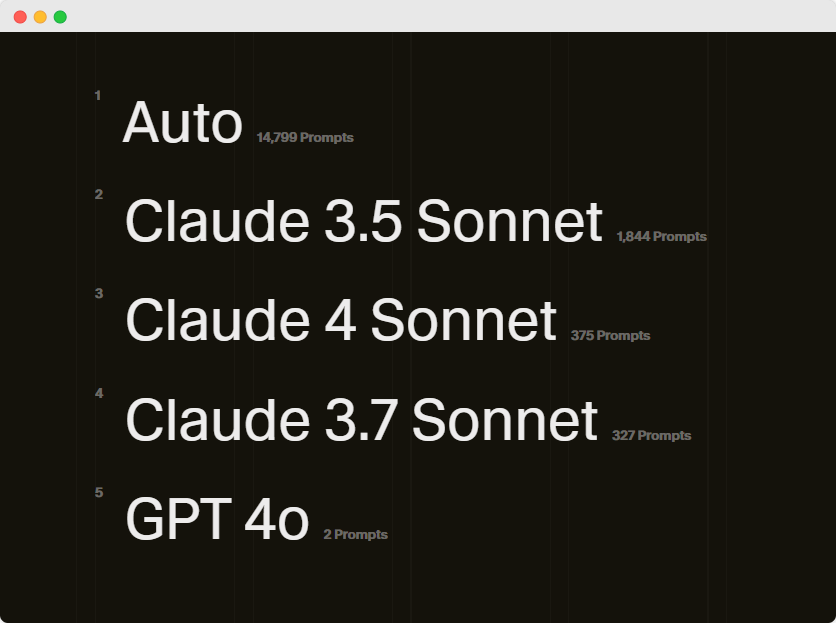

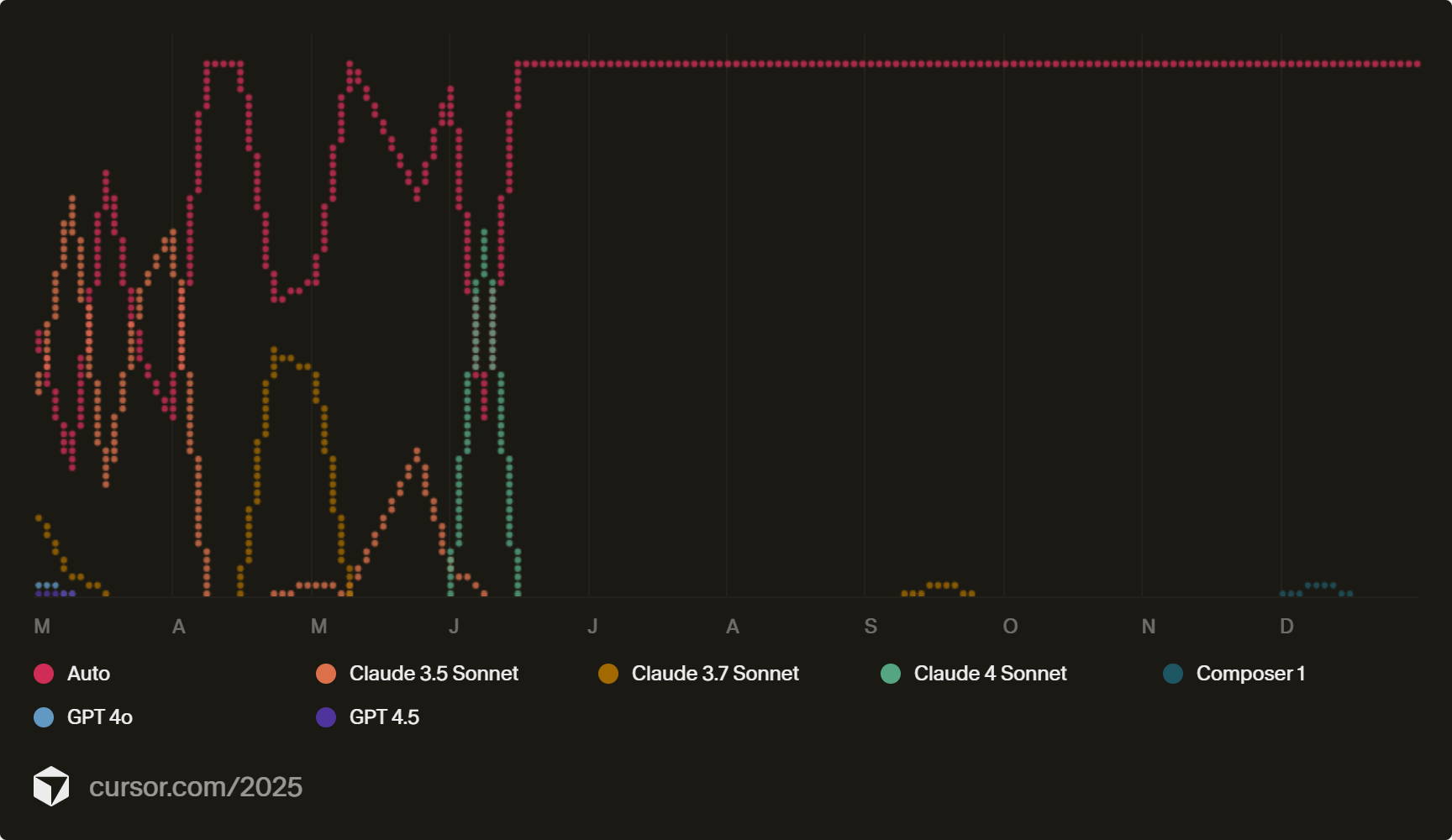

- Experimentation phase: Tested multiple models early to understand strengths across tasks (generation, refactoring, debugging, planning).

- Workflow phase: Shifted from one-off prompts to reusable, multi-step agent workflows for repeatable development patterns.

- Optimization phase: Consolidated on Auto routing, letting Cursor choose the model for each request, to reduce decision overhead and keep the focus on delivery.

Key UI/UX and Workflow Design Decisions

- Inline-first building: Prioritized tab-based completions for flow-state work and faster micro-decisions.

- Agent orchestration: Used agents for multi-step implementation tasks to reduce cognitive load and preserve momentum.

- Outcome-led prompting: Framed requests around business intent (user value, acceptance criteria, risks) rather than code-first instructions.
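As a rough illustration of outcome-led prompting, the sketch below assembles a request from business intent (user value, acceptance criteria, risks) before any implementation detail. It assumes Python 3.9+; the `OutcomeRequest` structure, its field names, the prompt layout, and the checkout example are all hypothetical and invented for illustration. This is not a Cursor API, just plain text you could paste into a chat.

```python
from dataclasses import dataclass, field


@dataclass
class OutcomeRequest:
    """Hypothetical container for an outcome-led request (not a Cursor API)."""
    user_value: str
    acceptance_criteria: list[str] = field(default_factory=list)
    risks: list[str] = field(default_factory=list)


def render_prompt(req: OutcomeRequest) -> str:
    """Render the request as plain text, leading with intent rather than code."""
    lines = ["## User value", req.user_value, "", "## Acceptance criteria"]
    lines += [f"- {c}" for c in req.acceptance_criteria]
    lines += ["", "## Risks to watch"]
    lines += [f"- {r}" for r in req.risks]
    return "\n".join(lines)


if __name__ == "__main__":
    # Invented example request, for illustration only.
    request = OutcomeRequest(
        user_value="Returning users can resume checkout without re-entering their cart.",
        acceptance_criteria=[
            "Cart persists across sessions for signed-in users",
            "Existing checkout tests still pass",
        ],
        risks=["Stale prices in a persisted cart"],
    )
    print(render_prompt(request))
```

Putting acceptance criteria and risks in the prompt itself means the same text doubles as a review checklist once the code comes back.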

Technical Implementation (High-Level)

The implementation focus was practical and lightweight:

- Agent-driven task decomposition (plan → execute → validate)

- Structured prompts for repeatable workflows (requirements, constraints, definition of done)

- Model selection increasingly abstracted via Auto routing to optimize reliability and speed
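The plan → execute → validate loop and the structured prompt (requirements, constraints, definition of done) can be sketched together in Python. This is a minimal, model-agnostic sketch under stated assumptions: `TaskSpec`, `run_task`, and the injected `plan` and `execute` callables are hypothetical names, not Cursor features, and in real use those callables would be agent calls. The definition of done is modeled as named checks so validation is mechanical and any failures can be fed back into the next attempt.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class TaskSpec:
    """Hypothetical structured prompt: requirements, constraints, definition of done."""
    requirements: list[str]
    constraints: list[str]
    # Each definition-of-done item is a readable name mapped to a check on the output.
    definition_of_done: dict[str, Callable[[str], bool]]

    def to_prompt(self) -> str:
        parts = ["Requirements:"] + [f"- {r}" for r in self.requirements]
        parts += ["Constraints:"] + [f"- {c}" for c in self.constraints]
        parts += ["Definition of done:"] + [f"- {name}" for name in self.definition_of_done]
        return "\n".join(parts)


def run_task(
    spec: TaskSpec,
    plan: Callable[[str], list[str]],
    execute: Callable[[str, str], str],
    max_attempts: int = 3,
) -> tuple[bool, str, list[str]]:
    """Plan -> execute -> validate, retrying with unmet checks fed back in.

    `plan` and `execute` stand in for agent calls (hypothetical); injecting
    them keeps the loop independent of any particular model.
    """
    prompt = spec.to_prompt()
    output = ""
    failed: list[str] = []
    for _ in range(max_attempts):
        # Plan: turn the structured prompt into ordered steps.
        steps = plan(prompt)
        # Execute: run each step, threading the accumulated output through.
        output = ""
        for step in steps:
            output = execute(step, output)
        # Validate: evaluate every definition-of-done check against the result.
        failed = [name for name, check in spec.definition_of_done.items() if not check(output)]
        if not failed:
            return True, output, []
        # Feed the unmet checks back so the next plan can address them.
        feedback = "\n".join(f"- {name}" for name in failed)
        prompt = spec.to_prompt() + "\nFix these unmet checks:\n" + feedback
    return False, output, failed


if __name__ == "__main__":
    spec = TaskSpec(
        requirements=["Add a health-check endpoint"],
        constraints=["No new dependencies"],
        definition_of_done={"mentions /health": lambda out: "/health" in out},
    )

    # Toy stand-ins for agent calls: the plan only gains a step once feedback appears.
    def plan(prompt: str) -> list[str]:
        steps = ["write handler"]
        if "Fix these unmet checks" in prompt:
            steps.append("register /health route")
        return steps

    def execute(step: str, prior: str) -> str:
        return f"{prior}{step}; "

    ok, out, failed = run_task(spec, plan, execute)
    print(ok, out, failed)
```

The first attempt fails its check, the failure is appended to the prompt, and the second plan adds the missing step. Keeping the agent calls as injected functions loosely mirrors the move toward Auto routing: the workflow stays the same whichever model answers.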

Challenges

- Over-reliance risk: Avoiding “AI autopilot” required deliberate checkpoints for UX, edge cases, and product intent.

- Quality control at scale: High throughput demanded stricter review habits and clearer acceptance criteria.

- Consistency: Standardizing prompts and agent patterns was essential to keep outputs predictable.

Measurable Results

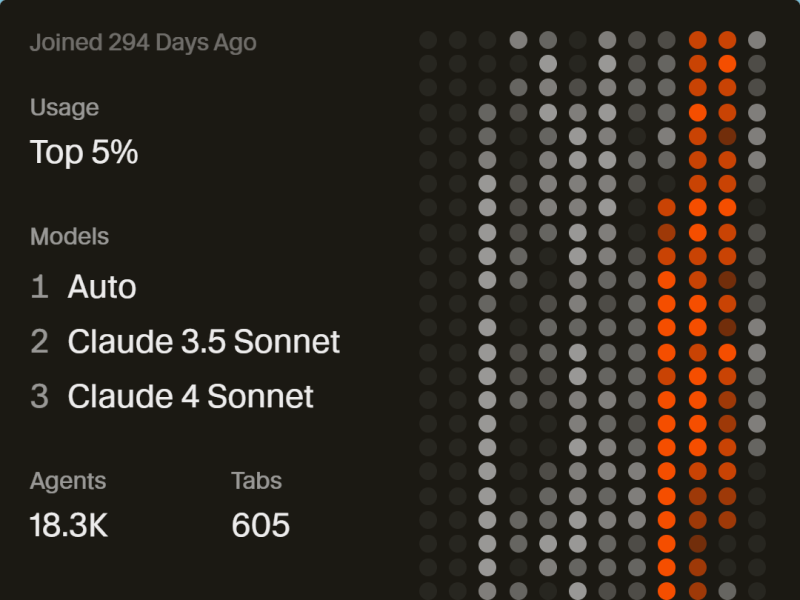

By year-end, the usage data reflected a meaningful workflow transformation:

- Ranked in the top 5% by usage, with 262 active days and a longest streak of 75 days

- 18,309 agent messages across 3,165 chats (roughly 5.8 messages per chat), indicating sustained, iterative delivery

- ~4.95B tokens used (roughly 19M per active day), signaling high-volume development cycles and large-context work

- Model strategy converged toward Auto as the dominant mode, reducing friction and increasing repeatability

The net effect was a shift from “working harder” to “operating smarter,” turning AI assistance into a scalable delivery habit rather than an occasional accelerator.